U.S. Geological Survey Coastal and Marine Geology Program

Video and Photograph Portal

Methods Summary

Chapter 1. Introduction

Background and motivation

The U.S. Geological Survey (USGS) Coastal and Marine Geology Program (CMGP) holds a vast collection of unique and valuable seafloor and coastal imagery, which is available through an online portal that provides a single location for data discovery and viewing. The USGS and our research partners invest immense resources collecting, processing, and archiving seafloor and oblique coastal video and photographs. Until 2013, only a small number of these datasets were available to the public through static web interfaces, partly because of the nature and size of the datasets, in particular the video data. Prior to development of the data portal, retrieving this imagery most often required internal USGS access with specific hardware and software. Furthermore, such a large amount of information was difficult to manage and challenging to share.

A successfully launched web portal pilot project, led by USGS Coastal and Marine data managers working with USGS contractors, dramatically improved access to archived CMGP multimedia for CMGP scientists, our research partners in the scientific community, and the general public. The CMGP video and photo portal is continually updated as new data are collected, made available, or processed into digital form to preserve and disseminate the data. Scientists are using these data to verify their interpretations of sonar data, to study fine-scale seafloor and coastal morphology, to provide a framework for understanding seafloor and coastal ecosystems, and to create maps of seafloor geology and surficial-sediment distribution and habitats.

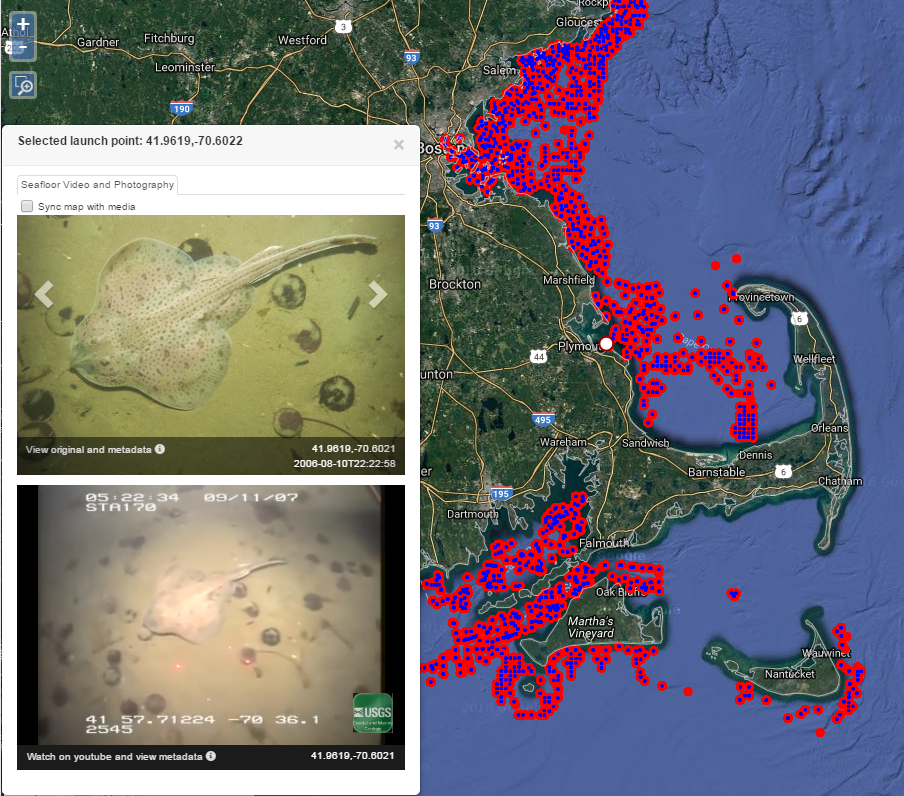

The video and photograph portal is based on an interactive map allowing users to zoom into an area of interest and find available USGS imagery. The co-located video and still photographs are displayed simultaneously on screen, along with their location on the map (fig. 1).

Figure 1. Example of CMGP Video and Photograph Portal showing map, photograph, and video for selected location (indicated by larger white dot on map).

CMGP collects imagery during surveys for a variety of purposes, including ground-truth validation of remotely sensed geophysical data, documenting change to coastal features, and understanding sediment-transport processes. Seafloor video and still images are collected using underwater sampling devices such as the USGS SEABOSS, BOBSled, and other sampling techniques. Oblique aerial imagery is collected from fixed-wing aircraft, helicopters, and unmanned drones, which are flown over coastal areas before and after storms or other events to document changes to sensitive coastal formations and structures.

Publication history

The portal was launched in 2013 as a proof-of-concept data repository and access point for imagery collected through the California Seafloor Mapping Program (doi: 10.5066/F7J1015K). Those tools and resources were further developed to create the current CMGP-wide portal (doi: 10.5066/F7JH3J7N), which hosts imagery from many of the regions where CMGP scientists work, including California, Oregon, Puget Sound, Hawaii, Alaska, Massachusetts, Florida, the Gulf and Atlantic Coasts, and American Samoa, as well as from numerous other projects. Several press releases announcing the launch of the portal and two phases of additional uploaded media have increased awareness of the portal, resulting in successful user outreach and encouraging USGS scientists to include imagery in the portal.

Updates to the portal include imagery added in 2014, 2015, and 2016. As of 2016, the portal contains more than 163,000 images, over 4,000 video files, and more than 1,001 hours of video covering more than 41,000 km of surveyed coast and sea floor. The portal currently contains imagery spanning from 2003 to 2015. Video and photographs originally collected on analog film media have been digitized and processed, along with more recently collected digital video and photographs, to meet a common standard for all CMGP video and photographs and to enable streamlined online publication in the portal.

Chapter 2. Methods

The imagery presented in the portal must be edited, formatted, and georeferenced. In the current CMGP Video and Photograph Portal, videos are ultimately stored and streamed as embedded YouTube videos, and photographs are stored in a web-hosted photo repository. Presenting the imagery in this way requires multiple processing steps and tools, including video and photo editing, database management, and computer scripting to automate processing, formatting, and quality assurance tasks (fig. 2). A robust set of processing tools has been developed to streamline and automate portions of the workflow based on the wide range of data types processed so far. However, the data received are sometimes uniquely organized and formatted, requiring individualized processing for the survey data to meet the portal standards. In that case, the processing tools are updated to accept a wider range of data formats and organizational structures.

Figure 2. Example workflow for obtaining, processing and publishing imagery to the portal.

Formatting

After video and/or photographic imagery is acquired from its source (the principal investigator of a survey, survey technicians, archives, or other USGS databases), the imagery is manipulated and reviewed to ensure it is “portal-ready.” Extraneous content is removed from videos and photographs, including boat deck shots, extensive water column sequences, blank or distorted imagery from equipment malfunction, and other content not directly relevant to seafloor and coastal understanding. The imagery must also be edited to meet the file format and resolution requirements for publication in the portal. Raw video files recorded during surveys may be too large and/or in an unsuitable format for web-streaming. Videos are compressed to a standard codec (for example, MPEG-4) and uploaded in a suitable container (for example, MP4) so that the videos meet the necessary YouTube specifications (Google, 2016). Compressing the video reduces the file size, thus making web-streaming feasible, while only slightly reducing the quality of the original video recording.

Other video processing steps may include stripping audio tracks and creating video text overlays displaying survey information such as survey ID, date, and sample-station ID. Older video collected on film is converted to digital format to enable it to be uploaded to the portal. Video stored in DVD format, which contains multiple different files (such as VOB files) for a single video, is reformatted to a single video file with the standard codec and container.
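The DVD reformatting step can be illustrated with a short Python sketch that assembles an FFmpeg command using FFmpeg's concat protocol. This is a minimal sketch and not the portal's actual script: the function name, the file names, and the choice of the libx264 encoder are assumptions made for illustration, and the audio track is dropped only as an example of the optional audio-stripping step.

```python
def build_dvd_merge_command(vob_files, output_file):
    """Return an ffmpeg argument list that joins VOB files into one MP4.

    Assumption: vob_files are given in playback order.
    """
    if not vob_files:
        raise ValueError("at least one VOB file is required")
    # The concat protocol reads the listed files as one continuous stream.
    joined = "concat:" + "|".join(str(f) for f in vob_files)
    return [
        "ffmpeg",
        "-i", joined,
        "-c:v", "libx264",  # assumed encoder; the text names MPEG-4 only as an example
        "-an",              # optional: drop the audio track
        str(output_file),
    ]
```

The returned list can be passed to `subprocess.run(cmd, check=True)` on a system where FFmpeg is installed.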

File type reformatting is generally not required for photographs, as web hosting platforms for photographs accept a wide range of standard image file formats and sizes. Photographs displayed in the portal are embedded with metadata tags, including exchangeable image file format (Exif) metadata tags. These metadata can be used to identify, locate, and provide contact information for an image. The standard metadata embedded in the portal’s photographs includes the Exif tags ImageDescription, Artist (often the survey’s principal investigator) and Copyright; the GPS tags TimeStamp, DateStamp, Latitude, Longitude, LatitudeRef and LongitudeRef; the JPEG Comment tag; the IPTC tags Credit, Contact, CopyrightNotice, Keywords and Caption-Abstract; and the XMP tag Caption. Some fields may be automatically populated during collection by the equipment (for example, the GPS tags, as well as digital camera and file information), and all the fields can be populated using a set of imagery-formatting tools described below. Photosets uploaded to the portal prior to the establishment of these standards may lack some of the metadata, but whenever possible the photos were updated to contain at least enough metadata to direct users to more information about the photoset.

Imagery-formatting tools

Multiple tools are used to edit, format, review, and upload imagery to the portal. These include video and photograph editing software, command-line tools, and computer scripts.

Editing, formatting, and compressing videos is accomplished using command-line functionality from the free software project FFmpeg (software available at https://www.ffmpeg.org, last accessed August 1, 2016). The software meets most processing requirements for the videos: it can trim extraneous video frames, remove or edit audio tracks, create text overlays, cut a single video into multiple videos, concatenate videos, convert codecs, create containers, and filter and compress video as required by the data.
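As an illustration of how such FFmpeg calls might be assembled from a script, the following Python sketch builds a command that trims a raw video and re-encodes it for web streaming. It is a sketch under stated assumptions rather than the portal's own tooling: the function name, the default quality setting, and the use of the libx264 and AAC encoders are illustrative choices.

```python
def build_trim_compress_command(src, dst, start=None, end=None,
                                crf=23, keep_audio=False):
    """Return an ffmpeg argument list that trims and compresses a video.

    start and end are timestamps such as "00:00:30"; crf is the x264
    quality setting (lower values give higher quality and larger files).
    All defaults are assumptions for illustration.
    """
    cmd = ["ffmpeg", "-i", str(src)]
    if start is not None:
        cmd += ["-ss", start]   # discard footage before this time
    if end is not None:
        cmd += ["-to", end]     # stop writing at this time
    cmd += [
        "-c:v", "libx264",
        "-crf", str(crf),
        "-pix_fmt", "yuv420p",       # widely supported pixel format
        "-movflags", "+faststart",   # place the index first for streaming
    ]
    cmd += ["-c:a", "aac"] if keep_audio else ["-an"]
    cmd.append(str(dst))
    return cmd
```

For example, `build_trim_compress_command("raw.mov", "clip.mp4", start="00:00:30", end="00:05:00")` returns a command that keeps the footage between 30 seconds and 5 minutes, without audio.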

ExifTool, another free software project (software available at http://owl.phy.queensu.ca/~phil/exiftool/, last accessed July 27, 2015), provides command-line functionality to view and edit a photograph’s Exif metadata tags. For this purpose, ExifTool has been incorporated into Python scripts (software available at https://www.python.org, last accessed July 27, 2015), allowing large numbers of photographs to be edited with data from spreadsheets containing geographic coordinates, citations, and summary text (Appendix). Other Python scripts have been written that use ExifTool and FFmpeg, as well as standard operating system tools, to edit, name, catalog, and organize video files.
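The spreadsheet-driven tagging described above could look roughly like the following Python sketch, which turns each row of a comma-separated spreadsheet into an ExifTool command. The column names (`filename`, `latitude`, `longitude`, `artist`, `copyright`, `description`) and function names are hypothetical; the actual scripts listed in the Appendix may be organized differently.

```python
import csv
import io


def exiftool_args(row):
    """Build an exiftool argument list from one spreadsheet row.

    Assumes signed decimal-degree coordinates (negative = south or west).
    """
    lat = float(row["latitude"])
    lon = float(row["longitude"])
    return [
        "exiftool",
        f"-EXIF:ImageDescription={row['description']}",
        f"-EXIF:Artist={row['artist']}",
        f"-EXIF:Copyright={row['copyright']}",
        f"-GPS:GPSLatitude={abs(lat)}",
        f"-GPS:GPSLatitudeRef={'N' if lat >= 0 else 'S'}",
        f"-GPS:GPSLongitude={abs(lon)}",
        f"-GPS:GPSLongitudeRef={'E' if lon >= 0 else 'W'}",
        row["filename"],
    ]


def commands_from_csv(csv_text):
    """Return one exiftool command per data row of the spreadsheet text."""
    return [exiftool_args(row) for row in csv.DictReader(io.StringIO(csv_text))]
```

Each returned list can be run with `subprocess.run(cmd, check=True)`; building the commands separately from running them makes it easy to review a batch before any photograph is modified.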

Navigation records

Navigation data provide the basis for visualizing the CMGP video and photograph imagery in the portal. Accurate geographic coordinates, combined with date and time information, are required to display data on the portal’s interactive map. These coordinates are generally acquired from a survey’s GPS navigation records. Every data point on the map represents a time value along a survey trackline, which also has an associated date, latitude, longitude, video (if applicable) and photograph (if applicable). Each data point can be associated with a video and photograph, or just one of the imagery types if the other does not exist (but at least one is required). The imagery is tied to the navigation records using time as a common field. The portal uses this record to display, for each coordinate containing imagery, the corresponding video and/or photograph, as linked by their YouTube file ID and/or web-hosted photo album ID and image ID. This master navigation file is created for each survey containing imagery uploaded to the portal.
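One way to picture a row of the master navigation file is as a small record keyed by date and time. The Python sketch below is an assumption-laden illustration, not the portal's schema: the class, field, and function names are hypothetical, and imagery is attached only when its timestamp exactly matches a navigation record.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NavPoint:
    """One navigation record; field names are hypothetical."""
    date: str
    time: str
    latitude: float
    longitude: float
    video_id: Optional[str] = None
    photo_id: Optional[str] = None


def attach_photos(points, photos_by_timestamp):
    """Attach photo IDs to navigation points using (date, time) as the key."""
    for point in points:
        key = (point.date, point.time)
        if key in photos_by_timestamp:
            point.photo_id = photos_by_timestamp[key]
    return points


def has_imagery(point):
    """A point is displayable only if at least one imagery type is present."""
    return point.video_id is not None or point.photo_id is not None
```

Points for which `has_imagery` returns `False` would be left out of the displayed record, reflecting the requirement that each data point carry a video, a photograph, or both.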

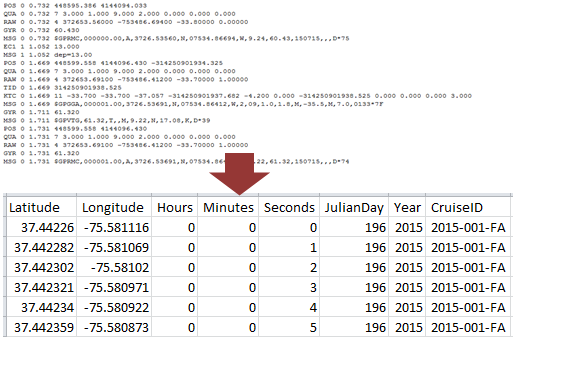

Multiple Python scripts are used to reformat GPS navigation records depending on their source and original format. USGS online databases contain navigation records for some surveys, while other navigation data are provided by science projects in various formats. A Python script reformats these records into the standard format used by the portal, containing date, time, latitude and longitude fields, as well as survey identification fields (Appendix). This process requires parsing combined date-time values into separate date and time values, converting coordinates to decimal-degree format, as well as organizing the other values into spreadsheet format.
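The two conversions named above, splitting a combined date-time value and converting coordinates to decimal degrees, can be sketched in Python as follows. The input formats shown (an ISO-style timestamp and degrees, minutes, and seconds with a hemisphere letter) are assumptions chosen for illustration; real navigation sources vary.

```python
from datetime import datetime


def split_datetime(stamp, fmt="%Y-%m-%dT%H:%M:%S"):
    """Split a combined date-time string into separate date and time strings."""
    parsed = datetime.strptime(stamp, fmt)
    return parsed.strftime("%Y-%m-%d"), parsed.strftime("%H:%M:%S")


def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees, minutes, seconds to signed decimal degrees.

    Southern and western hemispheres are returned as negative values.
    """
    value = degrees + minutes / 60.0 + seconds / 3600.0
    return -value if hemisphere.upper() in ("S", "W") else value
```

For instance, 121 degrees 45 minutes west becomes -121.75 decimal degrees, because 45 minutes is three quarters of a degree and western longitudes are negative.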

One common format for navigation records is the National Marine Electronics Association (NMEA) GPS string, as collected using HYPACK navigation hardware, for example. A Python script parses and reformats complex NMEA strings into a standard format with date, time, latitude, and longitude fields (fig. 3; Appendix). Optionally, the script can be run to batch reformat numerous files collected over multiple survey dates, thus expediting processing.

Figure 3. Example of NMEA GPS data parsed and reformatted as a spreadsheet. Navigation records are reformatted as necessary, in a similar manner, depending on how they are originally recorded, to ensure the data are compatible with the portal’s processing steps.
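As a rough illustration of NMEA parsing, the Python sketch below handles a single sentence type, the RMC (recommended minimum) sentence, which carries time, position, and date. Which sentence types appear in a given survey's records is an assumption here, and the function names are hypothetical; this is not the script listed in the Appendix.

```python
from datetime import datetime
from functools import reduce


def nmea_checksum(body):
    """XOR of the characters between '$' and '*', as two uppercase hex digits."""
    return format(reduce(lambda acc, ch: acc ^ ord(ch), body, 0), "02X")


def _to_decimal_degrees(raw, hemisphere):
    """Convert NMEA (d)ddmm.mmm plus a hemisphere letter to signed degrees."""
    dot = raw.find(".")
    if dot == -1:
        dot = len(raw)
    degrees = int(raw[:dot - 2])     # everything before the two minute digits
    minutes = float(raw[dot - 2:])   # mm.mmm
    value = degrees + minutes / 60.0
    return -value if hemisphere in ("S", "W") else value


def parse_rmc(sentence):
    """Parse one RMC sentence into date, time, latitude, and longitude."""
    body, _, checksum = sentence.strip().lstrip("$").partition("*")
    if checksum and checksum.upper() != nmea_checksum(body):
        raise ValueError("NMEA checksum mismatch")
    fields = body.split(",")
    if not fields[0].endswith("RMC"):
        raise ValueError("not an RMC sentence")
    return {
        "date": datetime.strptime(fields[9], "%d%m%y").strftime("%Y-%m-%d"),
        "time": datetime.strptime(fields[1][:6], "%H%M%S").strftime("%H:%M:%S"),
        "latitude": _to_decimal_degrees(fields[3], fields[4]),
        "longitude": _to_decimal_degrees(fields[5], fields[6]),
    }
```

Validating the checksum before parsing is a deliberate choice in this sketch: corrupted sentences are rejected rather than silently producing misplaced points on the map.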

Some videos (those collected using proprietary hardware) contain NMEA GPS strings encoded as an audio track in the video file. Because the audio encoding is proprietary, specific commercial software must be used to decode the audio track into a spreadsheet. A Python script is used to reformat the resulting spreadsheets into the required standard navigation spreadsheet format (Appendix). Other unique navigation record formats are treated in a similar manner to produce the standard output format needed for “portal-readiness.”

Other scripts are used to match imagery files with navigation records, resulting in a final spreadsheet containing date, time, latitude, longitude, and video and/or photograph file fields. An R script (software available at https://www.r-project.org/, last accessed August 18, 2016) creates a list of video start and end times to select only the portions of the navigation record that are associated with video, based on the common time data field (Appendix). An additional R script reviews the resulting imagery navigation spreadsheet to ensure that the duration of the navigation record matches the duration of the video (Appendix).
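The selection and duration check can be sketched as follows. The original scripts are written in R; Python is used here only for consistency with the other examples, and the function names and the two-second tolerance are assumptions rather than values taken from the portal's workflow.

```python
from datetime import datetime, timedelta


def select_navigation(nav_times, video_start, video_end):
    """Keep only navigation timestamps that fall within the video interval."""
    return [t for t in nav_times if video_start <= t <= video_end]


def durations_match(nav_times, video_seconds, tolerance_seconds=2.0):
    """Check that the navigation span agrees with the video duration.

    The tolerance is an assumed value for illustration.
    """
    if not nav_times:
        return False
    nav_seconds = (max(nav_times) - min(nav_times)).total_seconds()
    return abs(nav_seconds - video_seconds) <= tolerance_seconds
```

A mismatch flagged by `durations_match` would suggest gaps in the navigation record or an incorrect video start time, either of which would place imagery at the wrong location on the map.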

Uploading

After navigation records are compiled and completed for all imagery, the photographs and videos are uploaded to the portal. Additional structured query language (SQL) scripts then create a final spreadsheet record containing internet links to imagery (for example, YouTube IDs and web-hosted photo album IDs and image IDs), latitude, longitude, and the number of seconds into a video for every row in the record (Appendix). This final record with imagery and location is shared with the contractors, and the data are added to the interactive portal. Additionally, metadata and a summary of the survey with links to publications and funding programs are uploaded for each YouTube video, and a summary of the data with links is added to the portal’s data catalog web pages. Surveys in the portal’s data catalog are organized and grouped according to geographic regions and survey themes.
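The "number of seconds into a video" for each row is the difference between the row's timestamp and the video's start time. The portal computes its final record with SQL scripts; the Python sketch below only illustrates the arithmetic, with hypothetical field names and a placeholder YouTube ID.

```python
from datetime import datetime


def build_portal_rows(nav_rows, video_start, youtube_id):
    """Add a video offset in seconds and a link to each navigation row.

    nav_rows is a list of dicts with "time" (datetime), "latitude", and
    "longitude" keys; these names are assumptions for illustration.
    """
    rows = []
    for row in nav_rows:
        offset = int((row["time"] - video_start).total_seconds())
        if offset < 0:
            continue  # navigation recorded before the video began
        rows.append({
            "latitude": row["latitude"],
            "longitude": row["longitude"],
            "youtube_id": youtube_id,
            "seconds": offset,
            # Link that starts playback at the matching moment.
            "url": f"https://www.youtube.com/watch?v={youtube_id}&t={offset}s",
        })
    return rows
```

Storing the offset alongside each coordinate is what would let a map click start video playback at the moment the camera passed over that location.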

Chapter 3. Future work

Imagery data are processed and added to the portal as they are made available, either by initial collection during a survey, accessing archived digital data, or by digitizing analog data. After high-priority data, such as those that are high-profile or most recently collected, are acquired and added to the portal, it is possible to process and upload older data stored at USGS on DVD and film. Adding these legacy data to the portal enlarges the geographic and temporal scope of data displayed in the portal, thus increasing access to the USGS’s immense catalog and historical collection of marine and coastal imagery. The goal is to incorporate all existing and future CMGP imagery into this video and photo portal.

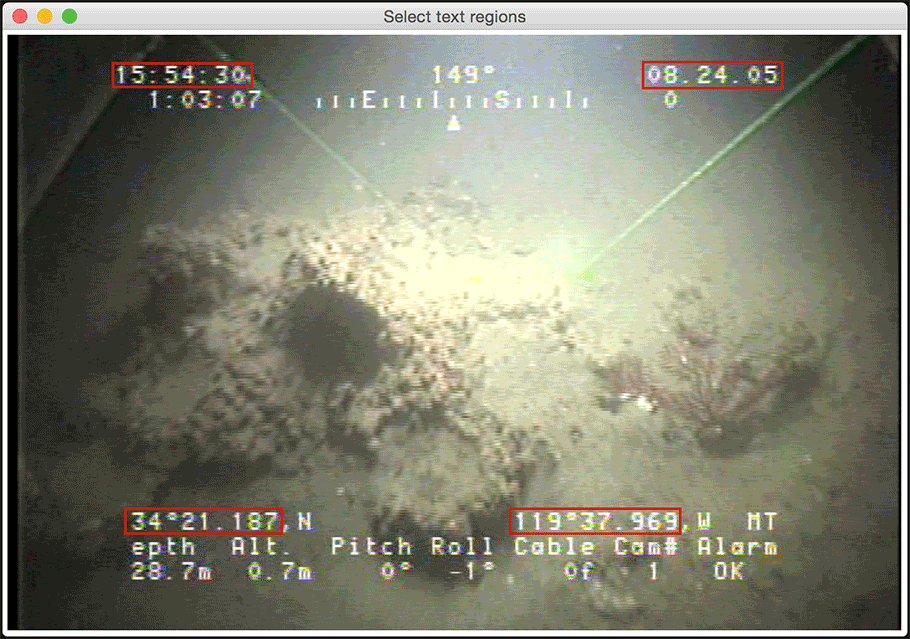

Additional tools are under development to increase processing efficiency and the types of imagery available to the portal. One of these tools is the video optical character recognition (OCR) software (Appendix). This software uses OCR technology to “read” text overlays from videos, converting what was only human-readable text into computer-readable data, and can thereby create digital navigation records for video surveys where they are otherwise unavailable (fig. 4). The software is bundled in a Python script and utilizes multiple Python packages and command-line tools, including FFmpeg and ImageMagick (available at http://www.imagemagick.org, last accessed March 28, 2016), and provides a graphical user interface allowing the user to select text overlay field locations on the video frame and define the type of data contained (for example, latitude, time). There are limitations to this capability, depending on video quality and the style of text overlay, but improvements to the software are increasing its accuracy, and videos lacking external navigation records or videos with navigation records that are too sparse may become available for display in the portal.
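A first step in such a workflow is to isolate the selected overlay region from sampled video frames before passing it to an OCR engine. The Python sketch below builds an FFmpeg command for that step only. It is an illustrative assumption about how the cropping could be done, not a description of the OCR software itself; the function name, sampling rate, and pixel coordinates are hypothetical.

```python
def build_overlay_crop_command(video, out_pattern, x, y, width, height,
                               frames_per_second=1):
    """Return an ffmpeg argument list that saves a cropped overlay region.

    (x, y) is the top-left pixel of the text field and width and height
    give its size, as a user might select them on a video frame.
    """
    if min(x, y) < 0 or min(width, height) <= 0:
        raise ValueError("crop region must lie within the frame")
    # Sample frames at a fixed rate, then crop each one to the text field.
    video_filter = f"fps={frames_per_second},crop={width}:{height}:{x}:{y}"
    return ["ffmpeg", "-i", str(video), "-vf", video_filter, str(out_pattern)]
```

An output pattern such as `time_%05d.png` would produce one numbered image per sampled frame, each containing only the chosen text field.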

As a proof of concept, a subset of other associated data, including geophysical basemap data layers from California, was included with the launch of the portal. GIS base layers for the area offshore of Santa Barbara Channel are included as spatial data layers in order to display seafloor morphology and character, identify potential marine benthic habitats, and illustrate surficial seafloor geology and shallow subsurface geology.

Figure 4. Example text overlays (framed in red) on a video frame processed by OCR software. The red frames are a feature of the software’s GUI, allowing the user to select the pixel location of the desired text fields from among others. Clockwise from top-left, fields contain time, date, longitude, and latitude data.

In an effort to move away from proprietary audio GPS encoding systems, less expensive hardware and software that accomplish a similar goal are being incorporated into data collection and processing. A circuit manufactured by Intuitive Circuits, LLC encodes GPS NMEA strings into a video’s audio channel. This is similar to the proprietary hardware’s capabilities, but at a fraction of the cost. The device can also decode its own signal, but a software decoder is under development so that the audio can be decoded using a computer without requiring the hardware itself. Videos embedded with GPS data in this way may improve the portability and accuracy of navigation records, allowing them to be portal-ready more quickly and efficiently.

References

Google, 2016, YouTube help center: accessed August 1, 2016 at https://support.google.com/youtube.

Appendix

Scripts described in this summary document were primarily created for a specific portal processing purpose. These scripts are listed below in order of reference as links to a publicly available GitHub repository. The scripts were developed by USGS and others; author credits appear in each individual script.